Content

- Blockchain Trilemma

- Decentralisation

- Security

- Scalability

- How the Blockchain Trilemma Manifests Itself

- Layer 2s & Rollups

- Zero Knowledge Rollups

- Optimistic Rollups

- Layer 3s

- The Lightning Network

- Recursive Proofs

- Sharding

- Danksharding

- Proto-Danksharding

- Modular Blockchains

- Risks of Scaling

- Conclusion

- About Zerocap

- DISCLAIMER

- FAQs

- What is the Blockchain Trilemma and how does it impact the scalability of blockchains?

- What are Layer 2 solutions and how do they help scale blockchains?

- What is the difference between Zero Knowledge Rollups and Optimistic Rollups?

- What are Layer 3 networks and how do they contribute to blockchain scalability?

- What is sharding and how does it help scale blockchains?

2 Jun, 23

How to Scale Blockchains

Since the peak of the last bull cycle, scaling blockchains has been a major focus for institutions, developers and projects. With demand growing and the supply of blockspace remaining unchanged, gas fees soared into the triple figures. These costs establish barriers to entry that result in many users choosing not to transact on the blockchain; herein lies the need for scaling solutions. Responding to the growing demand for blockspace and scaling decentralised networks, many projects have proposed various approaches. This piece will examine such solutions with respect to how they work, their strengths and the often overlooked weaknesses.

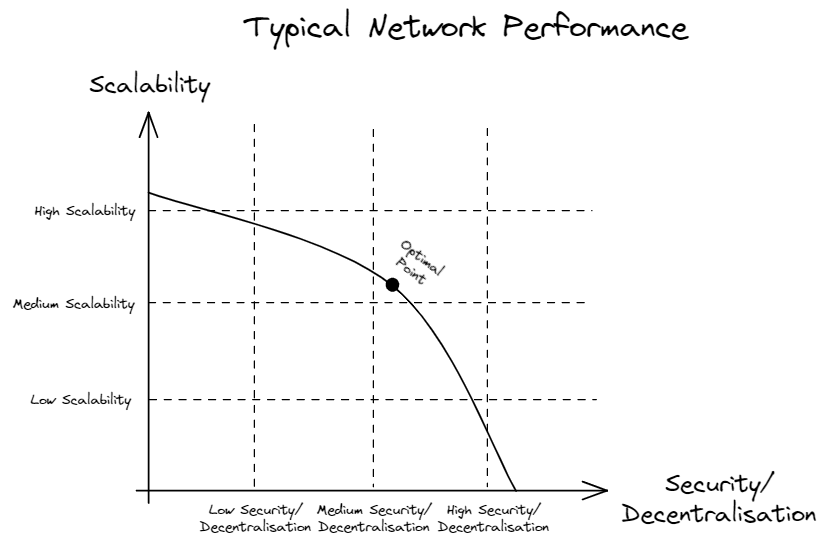

Blockchain Trilemma

Some blockchains are built to scale, whilst others focus on being highly secure or decentralised at the base layer. The prevailing view is that scalability, security and decentralisation cannot all be achieved concurrently by a single blockchain; this perspective is known as the Blockchain Trilemma, a term coined by Vitalik Buterin, the co-founder of Ethereum. The trilemma implies that architects of layer 1 blockchains must deprioritise one of the three properties in order to prioritise the other two.

Decentralisation

Within the context of blockchains and web3, decentralisation refers to a system in which operations are facilitated by a distributed network of nodes and participants that verify transactions and reach consensus in the absence of any form of centralised governance. At the protocol level, transactions are not stored on and retrieved from a server, as is the case with web2 companies; rather, data is stored in a peer-to-peer fashion, much like torrent files. The decentralisation of a blockchain depends on the diversity of nodes and the amount of control certain entities have over the network. The Nakamoto coefficient, proposed by Balaji Srinivasan and Leland Lee, is a tool to quantify the decentralisation of a chain: it is the minimum number of entities that would need to collude to compromise a critical part of the network.
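To make the Nakamoto coefficient concrete, the following is a minimal sketch in Python. It assumes the common reading of the metric, namely the smallest number of entities whose combined share of a resource (stake, hash power, nodes) exceeds a threshold. The function name, the 50% default threshold and the stake distributions are illustrative assumptions, not figures from any real chain.

```python
def nakamoto_coefficient(shares, threshold=0.5):
    """Smallest number of entities whose combined share exceeds `threshold`."""
    total = sum(shares)
    if total <= 0:
        raise ValueError("shares must sum to a positive value")
    running = 0.0
    # Start with the largest holders, since they reach the threshold fastest.
    for count, share in enumerate(sorted(shares, reverse=True), start=1):
        running += share
        if running / total > threshold:
            return count
    return len(shares)


# Hypothetical stake distributions (percentages), for illustration only.
concentrated = [40, 25, 10, 10, 5, 5, 5]
evenly_spread = [10] * 10

print(nakamoto_coefficient(concentrated))   # 2, since 40 + 25 = 65 > 50
print(nakamoto_coefficient(evenly_spread))  # 6, since 60 > 50 but 50 is not
```

A higher coefficient means more independent parties would need to collude, so the evenly spread network scores as more decentralised under this measure.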

Security

All computational systems require security to ensure that they remain live and are safeguarded from exploits and attacks. A common class of attack that networks must protect themselves against is Denial of Service (DoS) and Distributed Denial of Service (DDoS); these see a flurry of requests sent to a server, causing it to become overwhelmed and unable to respond to genuine requests. Blockchains must defend themselves against such attacks through security mechanisms, aiming for as close to 100% uptime as possible, meaning the chains are resilient and have no single point of failure. Thorough blockchain security results in the network obtaining liveness, whereby it can guarantee that blocks will continue to be appended to the longest chain and will not be compromised by a centralised authority.

Scalability

In order for blockchains to facilitate an exponentially increasing number of transactions, they must be capable of scaling. The scalability of a blockchain is typically measured in terms of the transactions it can process per second (TPS). If a network can handle a large amount of data without halting or slowing down, it is scaling in line with users’ demand. Scalability is inherently more limited for blockchains than for centralised servers because of the difficulty of coordinating a large number of nodes to reach consensus, whereby a majority agrees on the validity of transactions.

How the Blockchain Trilemma Manifests Itself

The original blockchain, Bitcoin, prioritises decentralisation and security over scalability. Accordingly, Bitcoin is highly secure, having seen only minor downtime since the mining of its Genesis Block in 2009, and decentralised in reaching consensus; however, it fails to handle substantial amounts of transaction data. Bitcoin can process up to 7 transactions per second, although in practice it often processes around 3. The most dominant smart contract-enabled blockchain, Ethereum, functions in a similar manner, favouring decentralisation and security at the cost of scalability. Conversely, most alternative layer 1s, like Solana, strive to fill the gap created by Ethereum’s scalability issues, but this comes at the expense of the other properties of the Blockchain Trilemma. Solana processes, on average, 3k transactions per second, yet is arguably centralised, as the Solana Foundation can push through automatic node upgrades, and arguably less secure, given the chain fell victim to numerous DDoS attacks in 2022.
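A rough back-of-the-envelope calculation shows where throughput figures of this order come from. The sketch below assumes a 1 MB block, a ten-minute block interval and two hypothetical average transaction sizes; these inputs are simplifying assumptions (real block capacity is measured in weight units and transaction sizes vary widely), so the results are only indicative.

```python
def max_tps(block_size_bytes, avg_tx_bytes, block_interval_s):
    """Upper bound on transactions per second for a fixed block size."""
    txs_per_block = block_size_bytes // avg_tx_bytes
    return txs_per_block / block_interval_s


BLOCK_SIZE_BYTES = 1_000_000   # assumed: ~1 MB of transaction data per block
BLOCK_INTERVAL_S = 600         # assumed: one block roughly every ten minutes

# Two hypothetical average transaction sizes, in bytes.
print(f"{max_tps(BLOCK_SIZE_BYTES, 250, BLOCK_INTERVAL_S):.2f}")  # 6.67
print(f"{max_tps(BLOCK_SIZE_BYTES, 500, BLOCK_INTERVAL_S):.2f}")  # 3.33
```

Under these assumptions, throughput is capped by block size and block interval alone, regardless of how powerful individual nodes are.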

Layer 2s & Rollups

A solution that has proven effective for the scalability of layer 1 blockchains is layer 2 networks. Layer 2s, the most prominent of which are rollups, offer a secondary execution environment; transactions can be executed on rollups in a less decentralised and secure manner. Whilst this might raise concerns, the execution environment of a layer 2 focuses purely on scalability. To decentralise the settlement of transactions and secure the network, layer 2s compress these transactions into a rollup and then post it on the layer 1 blockchain. In the context of Ethereum, a rollup will execute thousands of transactions, compress them and settle the proof of the state changes on Ethereum. This way, rollups can deliver scalability via their own network whilst simultaneously inheriting the formidable decentralisation and security of the layer 1 they are founded on. As such, rollups have the capacity to assist blockchains in overcoming the Blockchain Trilemma.

A rollup is a layer 2 that compresses many transactions before posting them as a single transaction on the underlying blockchain. Although the destination and purpose of a rollup are clear, there are numerous approaches that networks can take to compute their rollup. The two dominant methods are zero-knowledge proofs (ZKPs) and optimistic fraud proofs.
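The batching idea can be sketched in a few lines of Python. This is a toy model under stated assumptions: balances live in a dictionary, the "state root" is simply a SHA-256 hash of the sorted balances, and compression is plain zlib. The names `state_root`, `apply_tx` and `build_batch` are hypothetical and do not correspond to any real rollup's API.

```python
import hashlib
import json
import zlib


def state_root(balances):
    """Toy commitment to the full state: hash of the sorted balances."""
    encoded = json.dumps(balances, sort_keys=True).encode()
    return hashlib.sha256(encoded).hexdigest()


def apply_tx(balances, tx):
    """Execute one transfer on the layer 2 state, rejecting invalid ones."""
    sender, recipient, amount = tx["from"], tx["to"], tx["amount"]
    if amount <= 0 or balances.get(sender, 0) < amount:
        raise ValueError(f"invalid transfer: {tx}")
    balances[sender] -= amount
    balances[recipient] = balances.get(recipient, 0) + amount


def build_batch(balances, txs):
    """Execute many transactions off-chain and produce one record for layer 1."""
    new_balances = dict(balances)
    for tx in txs:
        apply_tx(new_balances, tx)
    batch = {
        "pre_root": state_root(balances),
        "post_root": state_root(new_balances),
        "tx_count": len(txs),
        "compressed_txs": zlib.compress(json.dumps(txs).encode()),
    }
    return new_balances, batch


# Hypothetical accounts and transfers.
l2_state = {"alice": 100, "bob": 50}
txs = [
    {"from": "alice", "to": "bob", "amount": 30},
    {"from": "bob", "to": "carol", "amount": 20},
]
new_state, batch = build_batch(l2_state, txs)
print(new_state)
print(batch["tx_count"], batch["pre_root"][:8], batch["post_root"][:8])
```

However many transfers go into `txs`, the layer 1 only receives one record containing the two roots and the compressed data; what differs between rollup designs is how the layer 1 is convinced that `post_root` is correct.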

Zero Knowledge Rollups

At the core of zero knowledge rollups (ZKRs) are mathematical proofs that can establish the truth of a statement without revealing any information about the statement itself – a ZKP. As we explained in our article on ZKPs and their applications, currently, these proofs are being integrated into rollups such that they can be pushed onto Ethereum and all participants can verify their validity, without needing to store the transaction information. In this way, ZKRs can scale blockchains on a number of levels – transaction throughput will increase and the storage data requirements will decrease.
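For intuition on what proving a statement "without revealing any information" looks like, here is a toy Schnorr proof of knowledge made non-interactive with a hash-based challenge. It is not the proof system used by any rollup (those rely on zkSNARKs or zkSTARKs over entire batches of transactions); it is a much simpler classical construction, and the group parameters below are deliberately tiny and insecure so the arithmetic can be followed by hand. All names are illustrative.

```python
import hashlib
import secrets

# Toy group: G = 2 has prime order Q = 11 modulo P = 23. Far too small for real use.
P, Q, G = 23, 11, 2


def challenge(public_key, commitment):
    """Derive the challenge by hashing the public values (Fiat-Shamir style)."""
    data = f"{G}|{public_key}|{commitment}".encode()
    return int.from_bytes(hashlib.sha256(data).digest(), "big") % Q


def prove(secret):
    """Prove knowledge of `secret` such that public_key = G**secret mod P."""
    public_key = pow(G, secret, P)
    nonce = secrets.randbelow(Q - 1) + 1        # random value in 1..Q-1
    commitment = pow(G, nonce, P)
    c = challenge(public_key, commitment)
    response = (nonce + c * secret) % Q         # the nonce masks the secret
    return public_key, (commitment, response)


def verify(public_key, proof):
    """Check G**response == commitment * public_key**c (mod P)."""
    commitment, response = proof
    c = challenge(public_key, commitment)
    return pow(G, response, P) == (commitment * pow(public_key, c, P)) % P


public_key, proof = prove(secret=7)
print(verify(public_key, proof))                        # True
tampered = (proof[0], (proof[1] + 1) % Q)
print(verify(public_key, tampered))                     # False
```

The verifier only ever sees the public key, the commitment and the response, yet is convinced the prover knows the secret exponent. Validity proofs in ZKRs apply the same principle to a far larger statement: that a whole batch of transactions was executed correctly.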

A ZKR uses a sequencer to generate a ZKP that compresses all transactions executed in its network. Due to the nature of these proofs, ZKRs can provide privacy as well as scalability; unlike Ethereum and most layer 1 blockchains, transaction details and the parties involved can be obfuscated from the public eye through proofs. Notably, despite this information being hidden, the validity of these transactions is still established on-chain, using zero-knowledge circuits whose proofs can be validated by network participants.

With transactions being executed on the ZKR and the proof being posted on the layer 1 blockchain, the question arises of where the proof is generated and where the underlying data is kept. Due to the difficulty of computing ZKPs without an exorbitant cost, most networks opt for off-chain computation, and some also use off-chain data availability, so as to improve their transaction throughput capacity. Scaling solutions that post validity proofs on-chain but keep the underlying transaction data off-chain are known as Validiums, not ZKRs. As a result of the tradeoffs Validiums make in regard to centralisation, these networks are currently capable of processing over 9k transactions per second.

One dominant company in the ZKR and Validium sector is StarkWare. The Israel-based company pioneered a new zero-knowledge primitive, zero-knowledge Scalable Transparent Arguments of Knowledge (zkSTARKs), enabling its network to compute proofs that do not require a trusted setup; this is the major point of difference between zkSTARKs and other ZKPs such as zkSNARKs. The company has launched both a permissioned and a permissionless ZKR platform, StarkEx and StarkNet respectively, and its products have over US$ 530 million locked into their contracts and have facilitated over 300 million transactions. The importance of the scalability features being developed by StarkWare can be inferred from its valuation of US$ 8 billion in May of 2022; this valuation represented a quadrupling from its Series C funding round in a mere 6 months.

Developed by Matter Labs, zkSync is another popular ZKR network striving to scale Ethereum. zkSync is a general-purpose platform that leverages zero-knowledge Succinct Non-Interactive Arguments of Knowledge (zkSNARKs) to compress transactions and post rollups on Ethereum in a similar manner to StarkNet/StarkEx. The platform also stands out for its support of Solidity, enabling Ethereum-based developers to move to zkSync with little friction. Moreover, zkSync is at the forefront of zkEVMs, meaning that it is attempting to render its network compatible with the Ethereum Virtual Machine (EVM), which is responsible for executing smart contracts. In the middle of November, amidst general market uncertainty, Matter Labs raised US$ 200 million in a Series C funding round co-led by Blockchain Capital and Dragonfly to support the launch of its ZKR.

Although ZKRs are frequently placed on a pedestal as the solution for Ethereum’s inability to scale, this category of layer 2 networks faces a number of fundamental issues. In the case of zkSync and other ZKRs that leverage zkSNARKs, as opposed to StarkWare’s use of zkSTARKs, a trusted setup is required. A trusted setup ceremony is a procedure whereby data is generated and shared between certain parties so that future proofs can be computed. The trust requirement arises because a party or a group of parties must be trusted to generate and publish the data honestly. Furthermore, trusting a single, centralised authority (or coalition of entities) is currently necessary for rollups to function, given the reliance on one sequencer. This sequencer is responsible for compressing transactions and generating the ZKP that will be posted on Ethereum. Eventually, ZKRs will introduce decentralised sequencers that operate in a similar fashion to nodes; however, the majority of these projects are still far from achieving this feat. In the case of Validiums, centralisation becomes a more meaningful concern as transaction data is held off-chain and hence cannot be transparently inspected by network participants on-chain.

Additionally, the generation of ZKPs is computationally heavy. Despite advances in cryptography, computing proofs for larger numbers of transactions remains substantially expensive. Although the cost of generating ZKPs will undoubtedly drop following the implementation of Danksharding and Proto-Danksharding (explained in depth below), the time and computation required will remain high. Moreover, although there have been numerous zkEVM announcements recently from the likes of Polygon Hermez, Scroll and zkSync, Ethereum’s Virtual Machine is not natively compatible with zero knowledge technology. This is a result of the developers of Ethereum not foreseeing the need for these integrations. Accordingly, as Buterin elucidated, until the EVM itself is altered via Ethereum Improvement Proposals (EIPs), ZKRs will not be able to support direct integrations from the Ethereum mainnet to their networks.

Optimistic Rollups

The alternative approach to compressing transactions on a layer 2 is with Optimistic Rollups (ORs). As opposed to ZKRs that generate irrefutable proofs, ORs take an optimistic viewpoint, assuming that the rolled-up transactions are all valid and correct. Instead of relying on proofs of validity, ORs implement a fraud-proving mechanism whereby network participants can question the veracity of rollups once they have been submitted on Ethereum. Depending on the network’s specifications, anyone can challenge the transactions compressed in a rollup via fraud proofs. In this sense, fraud proofs are the main tool used by ORs to preserve security on their network rather than purely relying on Ethereum’s security. This differs greatly from layer 1s, where a block is treated as invalid until a majority of nodes validate it; ORs, by contrast, post rollups that are deemed true and correct until proven invalid.

Game theory, the study of strategic decision-making as it relates to various applications, has been deeply considered in the design of ORs. If there exists a single honest participant on the layer 2 network, they will be incentivised to safeguard the validity of the OR through fraud proofs. If a rational, honest network participant identifies an invalid transaction in a compressed batch during the challenge window, the transactions within the batch are removed such that the OR can return to a correct state. Simultaneously, disincentives are applied in the sense that an entity would not challenge a rollup if an invalid transaction does not exist as they will face a monetary penalty.
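The challenge game described above can be sketched as follows. This is a simplified model under several assumptions: the batch carries its own pre-state so that anyone can re-execute it, the state root is a plain SHA-256 hash, the challenge window is seven days, and bonds are represented only by the returned message. The class and function names are hypothetical, and real fraud-proof systems use interactive, step-by-step dispute protocols rather than full re-execution.

```python
import hashlib
import json
from dataclasses import dataclass

CHALLENGE_WINDOW_S = 7 * 24 * 60 * 60  # assumed seven-day window


def state_root(balances):
    """Toy commitment to the state: hash of the sorted balances."""
    return hashlib.sha256(json.dumps(balances, sort_keys=True).encode()).hexdigest()


@dataclass
class Batch:
    pre_state: dict
    txs: list            # (sender, recipient, amount) tuples
    claimed_root: str    # post-state root asserted by the sequencer
    posted_at: int       # timestamp in seconds
    status: str = "pending"


def recompute_root(batch):
    """Re-execute the batch honestly; None means it contains an invalid transfer."""
    balances = dict(batch.pre_state)
    for sender, recipient, amount in batch.txs:
        if amount <= 0 or balances.get(sender, 0) < amount:
            return None
        balances[sender] -= amount
        balances[recipient] = balances.get(recipient, 0) + amount
    return state_root(balances)


def challenge(batch, now):
    """Anyone may dispute a pending batch while the window is open."""
    if batch.status != "pending" or now > batch.posted_at + CHALLENGE_WINDOW_S:
        return "challenge rejected: window closed"
    if recompute_root(batch) != batch.claimed_root:
        batch.status = "reverted"
        return "fraud proven: batch reverted, sequencer penalised"
    return "challenge failed: challenger penalised"


def finalise(batch, now):
    """An unchallenged batch becomes final once the window has elapsed."""
    if batch.status == "pending" and now > batch.posted_at + CHALLENGE_WINDOW_S:
        batch.status = "final"
    return batch.status


pre = {"alice": 10, "bob": 0}
transfers = [("alice", "bob", 4)]
honest = Batch(pre, transfers, state_root({"alice": 6, "bob": 4}), posted_at=0)
dishonest = Batch(pre, transfers, state_root({"alice": 6, "bob": 400}), posted_at=0)

print(challenge(dishonest, now=3600))
print(challenge(honest, now=3600))
print(finalise(honest, now=CHALLENGE_WINDOW_S + 1))
```

The penalty on failed challenges is what discourages spurious disputes, while the reward for a successful one is what motivates a single honest participant to keep watching.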

Fraud proofs are substantially cheaper than the validity proofs generated by ZKRs, ensuring that ORs can offer their users reduced gas fees for transactions. A reliance on individual participants rather than a single, computationally demanding validity proof results in fraud proofs averaging about 40k gas whilst the generation of zkSNARKs can require over 500k gas. Additionally, the approach to ensure the veracity of batches through fraud proofs serves the function of providing lower latency levels for decentralised applications (DApps).

Arbitrum, an optimistic layer 2 network founded by Offchain Labs, is currently the dominant OR in the Ethereum ecosystem. With a daily average of over 400k transactions and nearly 2 million unique addresses, many Ethereum-based DeFi protocols have leveraged Arbitrum’s EVM compatibility and growth to launch on the layer 2. This has significantly benefited the Ethereum mainnet given the sheer number of transactions moved off of the layer 1 and onto the OR. With a total value locked (TVL) into its DeFi smart contracts of over US$ 920 million, Arbitrum’s ecosystem is thriving; despite being a layer 2, Arbitrum’s TVL is superior to many popular layer 1s, including Solana and Avalanche.

Furthermore, Optimism is trailing close behind Arbitrum with a TVL of over US$ 530 million. Developed by the Optimism Foundation, the layer 2 network focuses on directing its revenue from scaling Ethereum to funding public goods, primarily open-source software that benefits the layer 1 chain. Like Arbitrum, Optimism offers developers EVM compatibility, resulting in many popular DApps, including Aave and Uniswap, launching on the network and driving its adoption; Optimism has reached over 1.8 million unique addresses. Though the foundation is now building Optimism, the team was initially known as Plasma Group; it includes pioneers that previously focused on Plasma as a solution to scale Ethereum, yet switched to ORs as they became cognisant of the effectiveness of ORs over Plasma.

However, despite ORs clearly displaying effectiveness in scaling Ethereum, these layer 2s, like ZKRs, face numerous issues. Fundamentally, all rollups are currently plagued by centralisation: an overreliance on a single sequencer with software that is not open-sourced. ORs are slightly more decentralised than ZKRs given they rely on network participants to review transaction batches and initiate fraud proofs; nonetheless, only one sequencer is capable of compressing the transactions that occurred on the network. Additionally, the usability of ORs is substantially diminished by the lengthy challenge window that results in users waiting days to withdraw their tokens. In the case of Optimism, a seven-day challenge period prevents the layer 2’s users from efficiently bridging their assets to the Ethereum mainnet.

Layer 3s

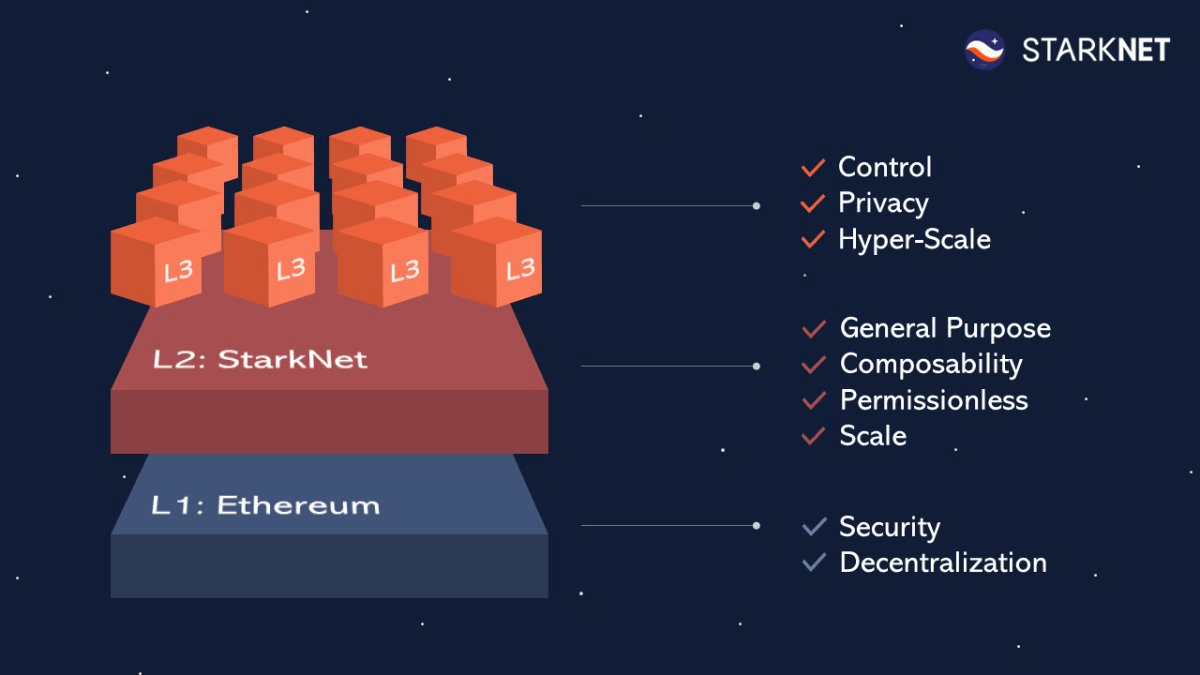

Layer 3 networks are protocols that operate on top of the underlying layer 2 scaling platform. These solutions are often referred to as “off-chain” because they operate outside of the main blockchain network, but they nonetheless interact with the blockchain through mechanisms that enable the posting of external data on layer 2 platforms. Layer 3 solutions are typically used to provide additional functionality and scalability to blockchain networks, beyond what is possible with the native layer 1 and layer 2 protocols. These solutions allow for faster, cheaper, and more scalable transactions, as well as the ability to support more complex and sophisticated use cases with significantly lower latency.

Whilst layer 2 networks focus on scaling the foundational blockchain that is likely congested, layer 3 networks provide what Buterin refers to as “customized functionality”. Specific dApps might prefer to leverage a virtual machine that differs from the EVM due to computation limitations within Ethereum’s approach to executing transactions and smart contracts. Recursive proofs, explained in depth below, are one approach being taken for layer 3 scaling solutions; according to StarkWare, recursive validity proofs can increase transaction throughput by a factor of 10 compared to normal validity proofs.

However, these simple conceptions of layer 3s often do not work out that easily due to limitations such as data availability and reliance on layer 1 bandwidth for emergency withdrawals. With an asymmetric relationship between scalability and security, the basis for layer 3s is questionable; if we add another solution atop layer 2 networks, will we stop at layer 3s? Will we eventually build new layers on layer 3s? If developers can, will the scaling ever stop? When scaling is achieved, it typically comes at the cost of decentralisation and security. Hence, if blockchains continued to scale upwards in perpetuity, an obstacle would eventually be faced.

Source: StarkWare

The Lightning Network

The Bitcoin Lightning Network is a proposed solution for the scalability of the Bitcoin blockchain. It is a layer 2 payment protocol that enables instant, low-cost, and scalable transactions by creating a network of payment channels between users. These channels allow users to transact directly with each other without the need to broadcast every transaction to the entire network, which reduces the burden on the blockchain and increases throughput capacity.
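A payment channel can be modelled very simply to show why it relieves the base chain. The sketch below is a toy under stated assumptions: it tracks balances between two parties and counts how many transactions would touch the blockchain, while omitting everything that makes real channels safe (signatures on each state, hash time-locked contracts, penalty transactions and multi-hop routing). The class and attribute names are hypothetical.

```python
class PaymentChannel:
    """Toy two-party channel: only opening and closing touch the blockchain."""

    def __init__(self, deposit_a, deposit_b):
        self.balances = {"a": deposit_a, "b": deposit_b}
        self.version = 0          # number of off-chain state updates
        self.on_chain_txs = 1     # the funding transaction
        self.is_open = True

    def pay(self, sender, recipient, amount):
        """Update balances off-chain; in practice both parties sign each new state."""
        if not self.is_open:
            raise RuntimeError("channel is closed")
        if amount <= 0 or self.balances[sender] < amount:
            raise ValueError("insufficient channel balance")
        self.balances[sender] -= amount
        self.balances[recipient] += amount
        self.version += 1

    def close(self):
        """Broadcast the final balances as a single settlement transaction."""
        self.is_open = False
        self.on_chain_txs += 1
        return dict(self.balances)


channel = PaymentChannel(deposit_a=50_000, deposit_b=50_000)
for _ in range(1_000):
    channel.pay("a", "b", 10)
    channel.pay("b", "a", 5)

print(channel.close())        # {'a': 45000, 'b': 55000}
print(channel.version)        # 2000
print(channel.on_chain_txs)   # 2
```

Two thousand payments settle with two on-chain transactions, which is the source of the throughput gain; the liquidity locked in each channel is also why routing and channel capacity become practical constraints.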

Despite being proposed as a solution for scalability, the Lightning Network has failed to gain significant traction among users. At its peak, the Lightning Network had less than US$ 220 million in total value locked (TVL) – this number being substantially less than the US$5 billion locked into Ethereum-based layer 2 networks. One reason for this is the lack of user-friendly interfaces and wallets that make it difficult for the average user to set up and use. Additionally, the network is still relatively small and illiquid, which can lead to issues with routing payments and finding open channels. Furthermore, the network’s transaction fees are still relatively high compared to on-chain transactions, foregrounding that the Lightning Network failed to achieve its goals.

Recursive Proofs

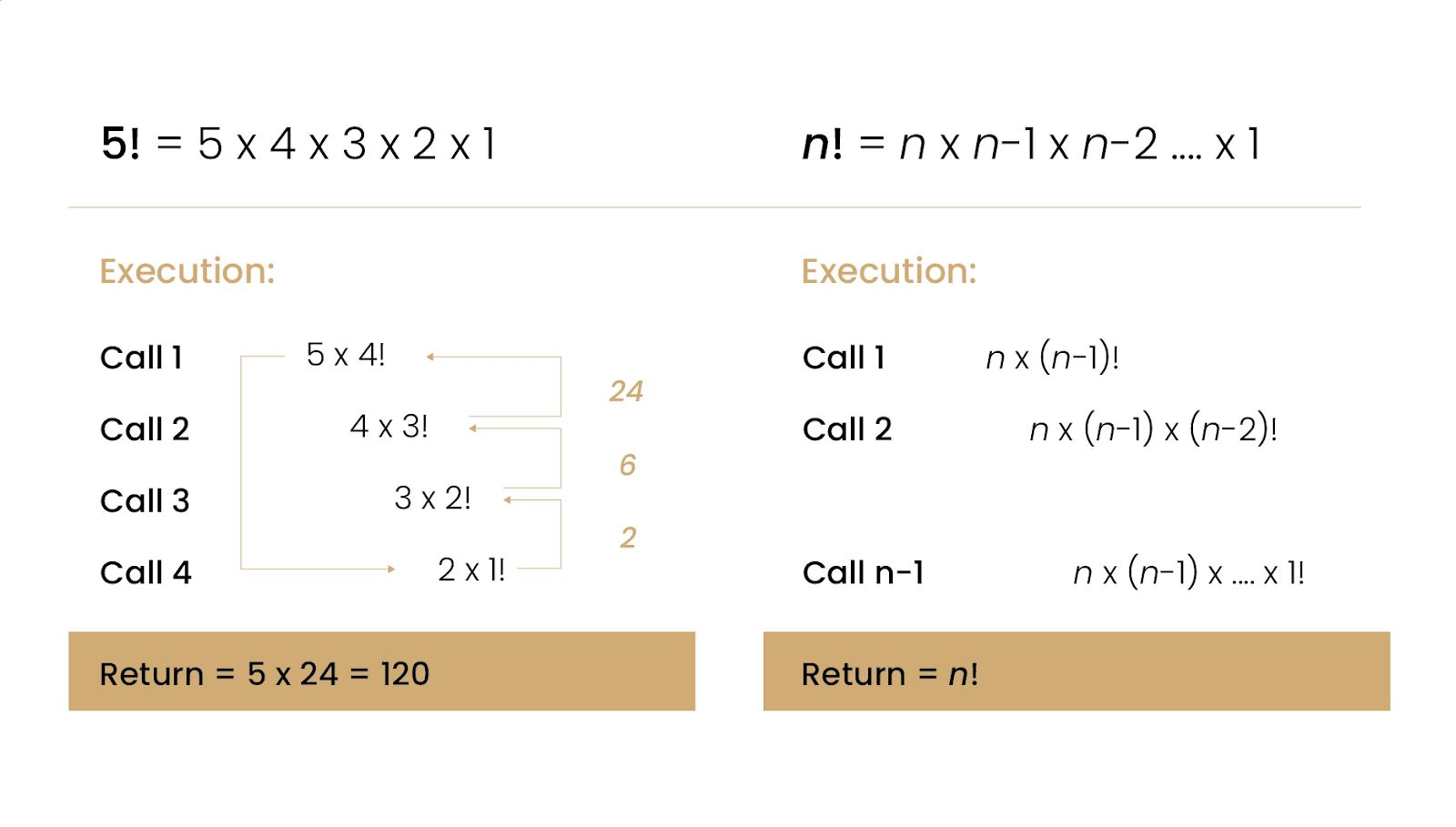

In computer science, recursion is a method of approaching problems whereby a function will call itself to resolve the same distilled problem that has nonetheless been reduced in its difficulty. Gradually, these recursive functions will take in smaller and smaller inputs until the overall problem has been resolved. A simple example of recursion is a factorial which makes the same call recursively until 1 is reached; at this point, the function will return 1 and work its way back up through prior iterations of the function.
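The factorial example reads as follows in Python; the function name is arbitrary.

```python
def factorial(n):
    """Return n! by reducing the problem to (n - 1)! until the base case."""
    if n < 0:
        raise ValueError("factorial is undefined for negative numbers")
    if n <= 1:
        return 1                      # base case: stop recursing
    return n * factorial(n - 1)       # recursive case: a smaller input


print(factorial(5))  # 120
```

The call `factorial(5)` expands to `5 * factorial(4)`, then `5 * 4 * factorial(3)`, and so on until the base case returns 1, after which the pending multiplications resolve in reverse order.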

Recursive programming is closely linked to recursive, or inductive, proofs; this proving technique establishes that a statement holds for every input if a base case and an inductive case are both shown to be true. These proofs can be simpler to execute, take up less storage capacity and are more efficient. Additionally, recursive proofs enable mathematicians to prove that a property holds irrespective of the size of its inputs.

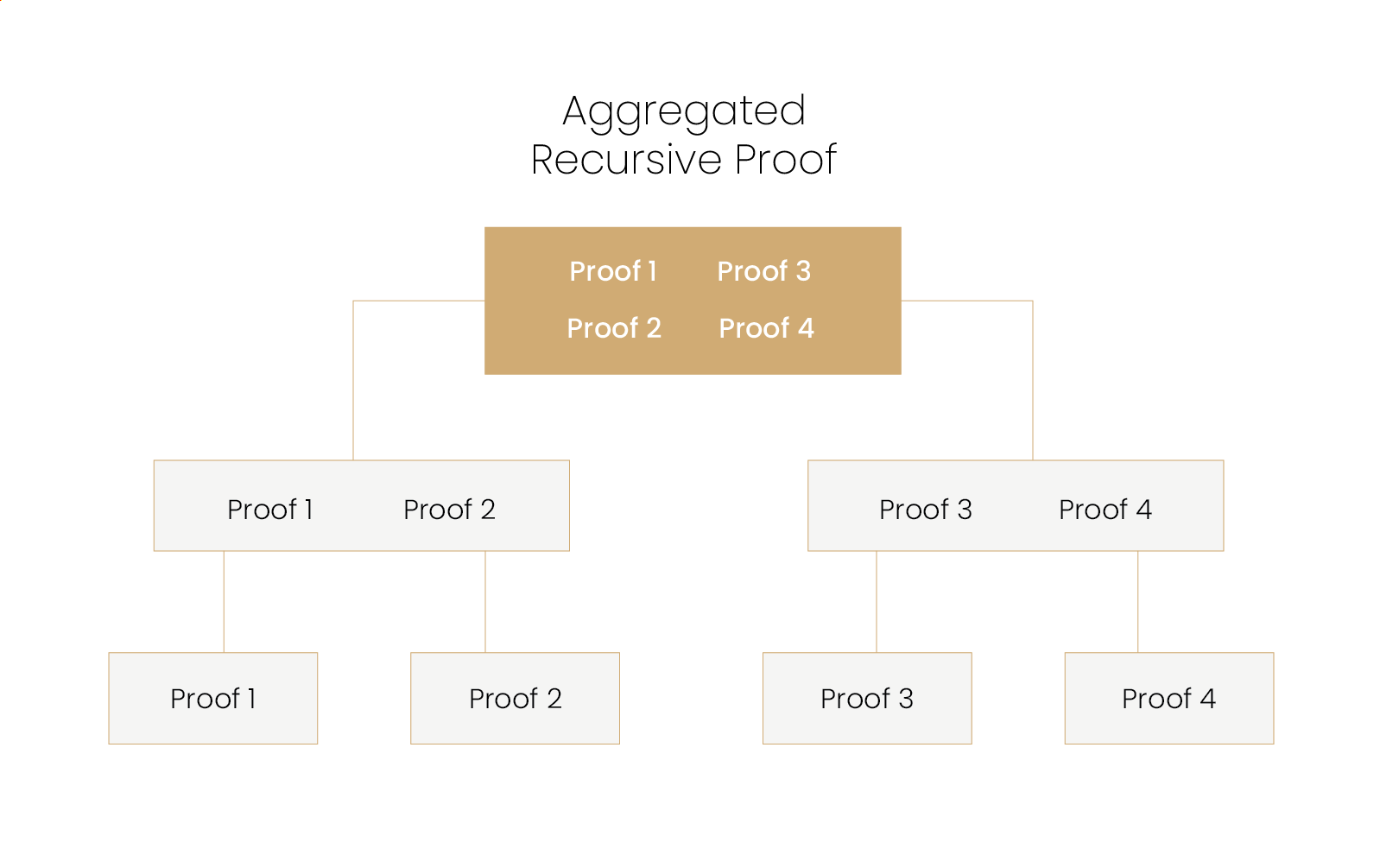

In the context of cryptocurrency, blockchains are being scaled using Zero Knowledge Recursive Proofs (ZKRPs). These proofs use recursion to dynamically generate ZKRPs which attest to the validity of past proofs. Similarly to how Merkle Trees hash and merge transactions until a root node is reached, ZKRPs recursively validate and merge previous layers of proofs. Accordingly, ZKRs can increase their capacity to scale by orders of magnitude with ZKPs within ZKPs.
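The Merkle tree analogy can be illustrated with a short recursive function. Note that this sketch only hashes and merges data; it does not verify anything, whereas a recursive proof system verifies child proofs inside the parent proof. Duplicating the last node on odd-sized levels is one common convention and is an assumption here, as are the function names.

```python
import hashlib


def h(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def merge_level(nodes):
    """Recursively pair up and hash nodes until a single root remains."""
    if len(nodes) == 1:
        return nodes[0]                       # base case: the root
    if len(nodes) % 2 == 1:
        nodes = nodes + [nodes[-1]]           # assumed convention: duplicate the last node
    parents = [h(nodes[i] + nodes[i + 1]) for i in range(0, len(nodes), 2)]
    return merge_level(parents)               # recurse on a level half the size


def merkle_root(leaves):
    if not leaves:
        raise ValueError("at least one leaf is required")
    return merge_level([h(leaf) for leaf in leaves])


transactions = [b"tx-1", b"tx-2", b"tx-3", b"tx-4", b"tx-5"]
print(merkle_root(transactions).hex())
```

Each level halves the number of nodes, so the number of merge rounds grows only logarithmically with the number of leaves. Recursive proving relies on the same shape: many proofs at the bottom, one proof at the top.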

StarkWare, the company behind StarkEx and StarkNet, has created the technology that generates recursive zkSTARKs. Through a method named Recursive Proving, StarkNet can generate a single proof that attests to the validity of multiple transaction rollups. The compression of multiple proofs into a single one enables transactors on the layer 2 network to share the costs with more parties, thereby minimising on-chain gas fees. Furthermore, StarkWare’s Recursive Proving pattern can reduce the network’s latency, as the proving process can be parallelised across multiple batches of transactions before the results are combined into a single proof that is posted and settled on Ethereum.

Sharding

In Buterin’s piece that established the Blockchain Trilemma, he wrote that “sharding is a technique that [provides for] all three” properties. Despite Buterin, and the wider community, being cognisant of this truth, the way in which sharding will be implemented has been disputed. Fundamentally, however, sharding refers to a scaling methodology that partitions the Ethereum network into discrete segments, referred to as shards. These shards are designed to independently process and validate a specific subset of transactions, with the ultimate objective of augmenting the overall transaction throughput of the network. This method aims to enhance the scalability of the Ethereum network by increasing its capacity to process a greater number of transactions per second.
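As a rough illustration of partitioning, the sketch below assigns transactions to shards by hashing the sender's address. This assignment rule, the shard count and the function names are hypothetical choices made for illustration; they are not how any Ethereum sharding proposal allocates work, and cross-shard transactions are ignored entirely.

```python
import hashlib
from collections import defaultdict


def shard_for(address, shard_count):
    """Deterministically map an address to a shard index."""
    digest = hashlib.sha256(address.encode()).digest()
    return int.from_bytes(digest[:8], "big") % shard_count


def partition(txs, shard_count):
    """Group transactions so each shard only processes its own subset."""
    shards = defaultdict(list)
    for tx in txs:
        shards[shard_for(tx["from"], shard_count)].append(tx)
    return dict(shards)


# Hypothetical transactions from 100 distinct senders.
pending = [{"from": f"user-{i}", "to": "merchant", "amount": 1} for i in range(100)]
by_shard = partition(pending, shard_count=4)

for shard_id in sorted(by_shard):
    print(shard_id, len(by_shard[shard_id]))
```

Because each shard validates only its own subset in parallel, total throughput can grow with the number of shards, provided the shards remain individually secure and can communicate when needed.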

Danksharding

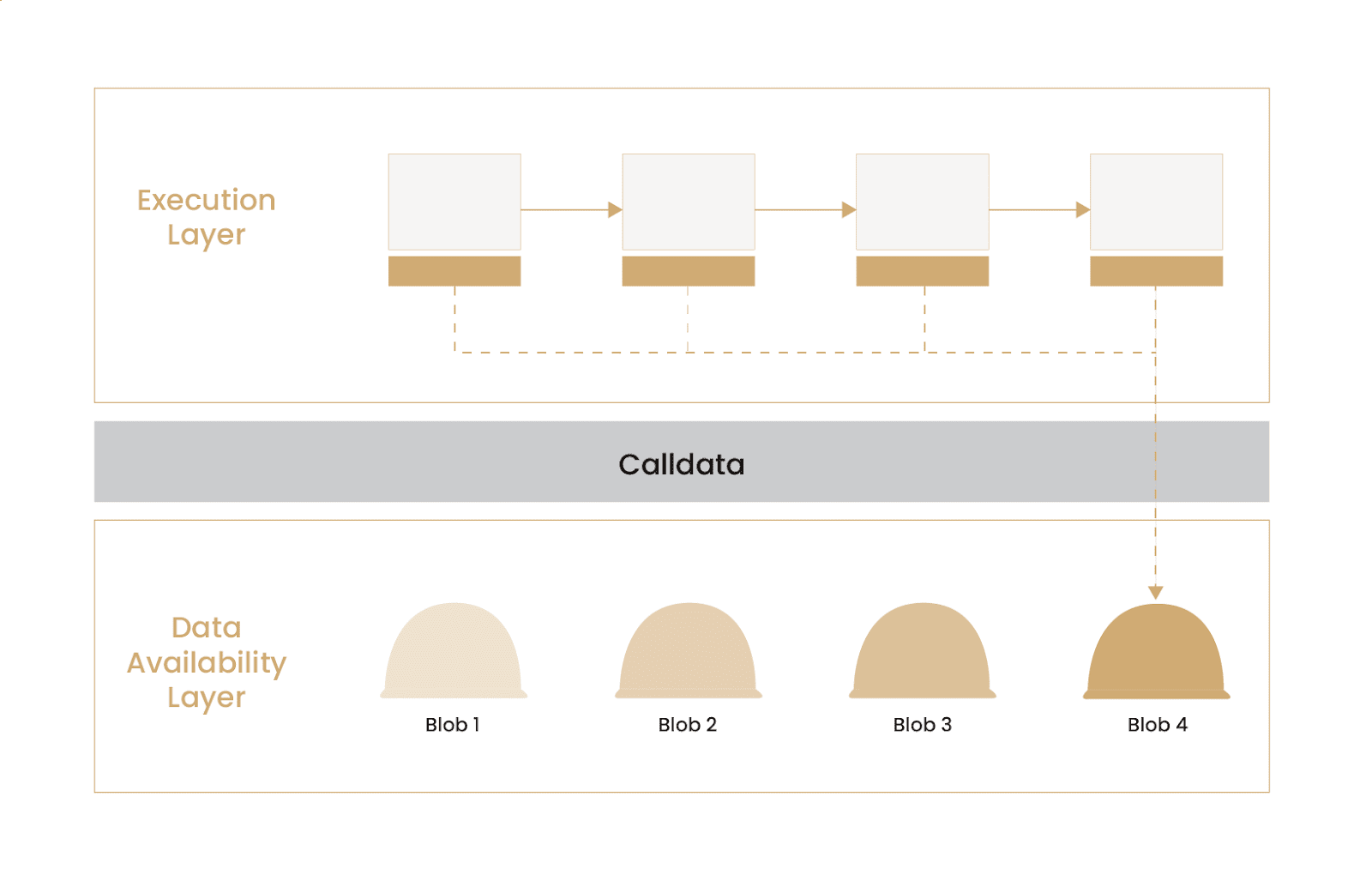

Danksharding is a novel scaling solution for the Ethereum blockchain, which is named after renowned Ethereum researcher, Dankrad Feist. This approach aims to alleviate scalability constraints by increasing the capability of the blockchain to provide sharding functionality. In contrast to other sharding methodologies, Danksharding seeks to minimise the quantity of data that needs to be stored on-chain, thereby creating additional space for binary large objects (BLOBs).

The Danksharding model is predicated on the principle that distinct shards do not require the submission of their own blocks by unique validators. Instead, each shard comprises a varying amount of data, enabling a solitary participant to propose a block that encompasses all the transactional data. This innovative concept has the potential to overcome the scalability drawbacks of layer 2 solutions which compress and post substantial amounts of data to the Ethereum mainnet, imposing a burden on all nodes to download and verify it. Danksharding breaks up the settlement and data availability layer of Ethereum, sharding data availability to facilitate the allocation of more transactional data to each block.

Proto-Danksharding

Along with the ingenuity of danksharding comes a high degree of cryptographic difficulty in implementing the scaling solution. For this reason, it is expected that danksharding will be merged into the base layer of Ethereum in 2-5 years. Accordingly, an abridged solution called proto-danksharding emerged from Diederik Loerakker, also known as Proto Lambda.

Proto-danksharding, more formally referred to as EIP-4844, introduces a new transaction format for BLOBs that results in more efficient use of storage space on the Ethereum mainnet, increasing data availability and reducing the cost of storing data on-chain. The approach is to focus purely on scaling the data availability layer, sharding the data to facilitate the allocation of more transactional data to each block. Indeed, the proposal will increase the transactional block capacity of Ethereum by more than 10 times and reduce transaction fees on Layer 2 solutions by up to 100 times.
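To give a sense of scale, the arithmetic below works out how much blob data a block could carry. The blob size (4096 field elements of 32 bytes each) and the target and maximum of 3 and 6 blobs per block are taken from the EIP-4844 specification as later finalised; they postdate parts of the discussion in this article and should be treated as assumptions here, as should the helper names.

```python
FIELD_ELEMENTS_PER_BLOB = 4096   # per EIP-4844
BYTES_PER_FIELD_ELEMENT = 32     # per EIP-4844
TARGET_BLOBS_PER_BLOCK = 3       # assumed: target in the finalised EIP
MAX_BLOBS_PER_BLOCK = 6          # assumed: maximum in the finalised EIP
SLOT_TIME_S = 12                 # Ethereum slot duration in seconds

BLOB_BYTES = FIELD_ELEMENTS_PER_BLOB * BYTES_PER_FIELD_ELEMENT


def blob_bytes_per_block(blob_count):
    """Raw blob capacity carried alongside a block with `blob_count` blobs."""
    return blob_count * BLOB_BYTES


print(BLOB_BYTES)                                                  # 131072
print(blob_bytes_per_block(TARGET_BLOBS_PER_BLOCK))                # 393216
print(blob_bytes_per_block(MAX_BLOBS_PER_BLOCK))                   # 786432
print(blob_bytes_per_block(TARGET_BLOBS_PER_BLOCK) / SLOT_TIME_S)  # 32768.0
```

Under these parameters each blob holds 128 KiB, so a block at the target carries 384 KiB of rollup data in a separately priced space that does not compete with ordinary transactions for gas.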

The Ethereum Foundation was tentatively targeting the Shanghai Upgrade for the implementation of this solution, but recently announced that EIP-4844 will instead go live during a future major upgrade. Nonetheless, proto-danksharding is considered a critical component of Ethereum’s roadmap and a crucial step towards the implementation of danksharding.

Zerocap looks under the hood of both proto-danksharding and danksharding in this article on the future of Ethereum.

Modular Blockchains

Modular blockchains represent a significant advancement in the field of blockchain technology, as they allow for the construction of custom-made systems that are tailored to specific use cases and requirements. Rather than being responsible for all aspects traditionally assigned to monolithic blockchains, modular blockchains are composed of a stack of independently operating layers each specialising in a single purpose they were designed to achieve. This approach has the potential to create a new latticework for the industry, in which layers coordinate to create effective, efficient, and optimised chains.

The shift from monolithic to modular blockchains also highlights the emergence of key responsibilities taken up by unique, separate layers. These layers make up the modular blockchain stack and are fundamental to ensuring that web3 can facilitate a sufficient number of transactions per second to support mainstream adoption. In this context, the blockchain trilemma can be overcome by modularity and the underlying specialisation that encourages each layer to specifically improve its offerings.

Zerocap delves into the evolution of modular blockchains from monolithic blockchains in this article.

Risks of Scaling

Scaling is important, but scaling whilst maintaining decentralisation and security is more important still. Nonetheless, many developers designing blockchains ignore the asymmetrical relationship between scaling their network and its decentralisation and security due to the importance that communities and users place on higher throughput.

Blockchains often derive their security from the number of validators that are involved in reaching consensus on the validity of transactions in a block. With over 500,000 stakers validating the Ethereum blockchain, the network is highly secure; the cost of a 51% attack is exorbitant and the likelihood of the chain halting is low. However, layer 2 solutions have a smaller set of individuals ensuring the security of the network. For optimistic rollups with fraud proofs enabled, network participants are capable of raising the layer 2’s security; however, in comparison to the number of Ethereum validators, the absence of enough eyes on false transactions in batches lowers the rollup’s security. Another example of this is the frequent chain outages experienced by the scalable blockchain, Solana, in 2023. With a cost-friendly and efficient consensus mechanism focused on scaling, Solana has thus far highlighted its foundation’s prioritisation of throughput over security.

In a similar sense, the decentralisation of a blockchain network is extrapolated from factors relating to the chain’s validators, including their geographical, political, architectural and technical decentralisation. Respectively, these factors refer to the physical decentralisation of stakers, the number of individuals or entities controlling the validators, the number of physical computers validating the system and their reliance on other computational resources, including cloud storage. Though layer 1 blockchains are capable of satisfying many if not all of these facets of decentralisation, layer 2s are not as apt. Presently, all layer 2s are made up of a single, centralised sequencer that is responsible for rolling up all transactions into a batch and posting the batch on the layer 1 chain. This reliance on one sequencer is a clear indication of the centralisation risks of scaling through layer 2s. In addition, when ZKRs that utilise zkSNARKs are established, a centralised, trusted setup ceremony takes place in which cryptographic keys are created for the sequencer; this represents a severe risk in that users must rely on the trustworthiness of the teams involved within the setup ceremony.

Conclusion

It is clear that scalability is an important focus in blockchain, as evidenced by the number of teams working on unique solutions for increasing the throughput of blockchain networks. At the moment, rollup networks have been gaining traction as the expected solution for scalability limitations. Nevertheless, all layer 2s remain in their infancy and for this reason are not currently capable of relieving all of the pressure on layer 1 blockchains; when attempts are made to do so, security and decentralisation risks arise. With the passage of time, these solutions will be refined, offering users cheap and efficient transactions without disregarding the security and trustlessness of the blockchain.

About Zerocap

Zerocap provides digital asset liquidity and custodial services to forward-thinking investors and institutions globally. For frictionless access to digital assets with industry-leading security, contact our team or visit our website at www.zerocap.com.

DISCLAIMER

Zerocap Pty Ltd carries out regulated and unregulated activities.

Spot crypto-asset services and products offered by Zerocap are not regulated by ASIC. Zerocap Pty Ltd is registered with AUSTRAC as a DCE (digital currency exchange) service provider (DCE100635539-001).

Regulated services and products, including structured products (derivatives) and funds (managed investment schemes), are available to Wholesale Clients only as per Sections 761GA and 708(10) of the Corporations Act 2001 (Cth) (Sophisticated/Wholesale Client). To serve these products, Zerocap Pty Ltd is a Corporate Authorised Representative (CAR: 001289130) of AFSL 340799.

All material in this website is intended for illustrative purposes and general information only. It does not constitute financial advice nor does it take into account your investment objectives, financial situation or particular needs. You should consider the information in light of your objectives, financial situation and needs before making any decision about whether to acquire or dispose of any digital asset. Investments in digital assets can be risky and you may lose your investment. Past performance is no indication of future performance.

FAQs

What is the Blockchain Trilemma and how does it impact the scalability of blockchains?

The Blockchain Trilemma is a concept coined by Vitalik Buterin, co-founder of Ethereum, which suggests that it’s challenging for a blockchain to achieve scalability, security, and decentralization simultaneously. Blockchains like Bitcoin prioritize decentralization and security, but this comes at the cost of scalability. The Trilemma is a significant factor in the development of scaling solutions, as it necessitates a balance between these three crucial aspects.

What are Layer 2 solutions and how do they help scale blockchains?

Layer 2 solutions, of which rollups are the most prominent type, are secondary execution environments that are less decentralized and secure on their own, focusing primarily on scalability. They execute transactions off the main chain, compress them into a batch and post that batch to the Layer 1 blockchain, thereby leveraging the security and decentralization of the Layer 1 network while enhancing scalability. Examples of Layer 2 solutions include Zero Knowledge Rollups (ZKRs) and Optimistic Rollups (ORs).

What is the difference between Zero Knowledge Rollups and Optimistic Rollups?

Zero Knowledge Rollups (ZKRs) use mathematical proofs to establish the validity of a statement without revealing the information underlying it. They compress transactions and post them on the Layer 1 blockchain together with a proof that the batch is valid, providing scalability and privacy. Optimistic Rollups (ORs), on the other hand, operate on the assumption that all transactions are valid and correct. They implement a fraud-proving mechanism through which network participants can challenge the validity of transactions; only a successful challenge causes the disputed batch to be rejected.
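
The fraud-proving idea behind Optimistic Rollups can be illustrated with a small Python sketch. It is a toy model rather than any real rollup's protocol: the account names, the `apply_batch` and `is_fraudulent` helpers and the balance-map representation of state are all assumptions made for illustration. A batch is accepted by default, and a challenger simply re-executes it to check whether the claimed result holds.

```python
def apply_batch(balances, batch):
    """Re-execute a batch of (sender, recipient, amount) transfers.

    Returns the resulting balances without mutating the input.
    """
    state = dict(balances)
    for sender, recipient, amount in batch:
        if state.get(sender, 0) < amount:
            raise ValueError("insufficient balance")
        state[sender] -= amount
        state[recipient] = state.get(recipient, 0) + amount
    return state


def is_fraudulent(pre_state, batch, claimed_post_state):
    """Challenger's check: does re-execution disagree with the claim?"""
    try:
        return apply_batch(pre_state, batch) != claimed_post_state
    except ValueError:
        # A batch containing an invalid transfer is also treated as fraudulent.
        return True


# Hypothetical starting state and batch.
pre = {"alice": 10, "bob": 0}
batch = [("alice", "bob", 4)]

honest_claim = {"alice": 6, "bob": 4}
dishonest_claim = {"alice": 6, "bob": 40}

print(is_fraudulent(pre, batch, honest_claim))
print(is_fraudulent(pre, batch, dishonest_claim))
```

A Zero Knowledge Rollup would replace the challenger's re-execution with a validity proof submitted alongside the batch, so no challenge step is needed.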

What are Layer 3 networks and how do they contribute to blockchain scalability?

Layer 3 networks operate on top of Layer 2 platforms and provide additional functionality and scalability to blockchain networks. They allow for faster, cheaper, and more scalable transactions, supporting more complex and sophisticated use cases with significantly lower latency. However, these networks often face limitations such as data availability and reliance on Layer 1 bandwidth for emergency withdrawals.

What is sharding and how does it help scale blockchains?

Sharding is a scaling methodology that partitions the blockchain network into discrete segments, or shards. Each shard independently processes and validates a specific subset of transactions, thereby increasing the overall transaction throughput of the network. Sharding aims to enhance the scalability of the blockchain by increasing its capacity to process a greater number of transactions per second.
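
As a rough illustration of the partitioning described above, the following Python sketch assigns transactions to shards by hashing the sender address. The shard count, the `shard_for` and `partition` helpers and the transaction format are hypothetical; production sharding designs differ considerably and must also handle cross-shard communication, which this sketch ignores.

```python
import hashlib
from collections import defaultdict

NUM_SHARDS = 4  # hypothetical shard count, chosen for illustration


def shard_for(sender, num_shards=NUM_SHARDS):
    """Map a sender address to a shard index using a stable hash."""
    digest = hashlib.sha256(sender.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % num_shards


def partition(transactions, num_shards=NUM_SHARDS):
    """Group transactions by the shard that would process them."""
    shards = defaultdict(list)
    for tx in transactions:
        shards[shard_for(tx["sender"], num_shards)].append(tx)
    return dict(shards)


# Twenty made-up transactions from twenty made-up addresses.
txs = [{"sender": f"addr{i}", "amount": i} for i in range(20)]
by_shard = partition(txs)

for shard_id in sorted(by_shard):
    print(shard_id, len(by_shard[shard_id]))
```

Because each shard only processes its own subset of transactions, the shards can work in parallel, which is where the throughput gain comes from.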
